A lot of new things are coming. Some things are not coming back at all.

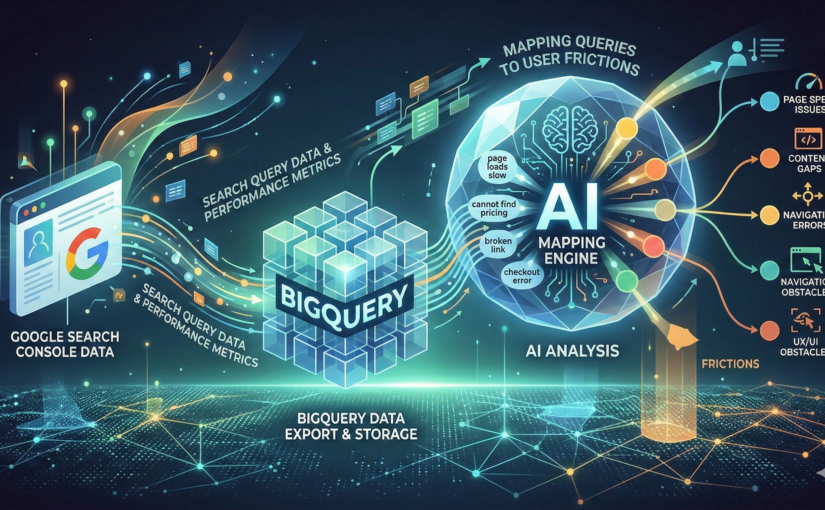

External search data as a diagnostic for digital user guidance

This post goes a little beyond the boundaries of pure search engine optimization. But isn't that what we SEOs always do: look beyond our own plate, at the users, the intent, and the usability and actual use of the website?

So it is probably not so bad that I am whisking you away on a little journey out of pure SEO land and into the lovely land of friction.

How to use GSC data to diagnose problems in user guidance