Stylesheets included via link tags can cause rendering delays because the browser waits for the CSS to be fully downloaded and parsed before displaying any content. This can result in significant delays, especially for users on high-latency networks such as mobile connections.

To optimize page loading, PageSpeed recommends splitting your CSS into two parts. The first part should be inlined and is responsible for styling the content that appears above the fold (the initially visible portion of the page). The remaining CSS can be deferred and loaded later, reducing the impact on initial page rendering.
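
As a rough illustration of that split, the sketch below builds the markup for an inlined critical part plus a deferred stylesheet, using the preload pattern described on web.dev. The helper name buildStyleTags and the example path /css/main.css are made up for this example, and the inputs are assumed to be trusted (nothing is escaped).

```javascript
// Hypothetical helper: returns the <head> markup for the inline-plus-deferred pattern.
function buildStyleTags(criticalCss, deferredHref) {
  return [
    // Critical rules are inlined so the first paint does not wait on a CSS request.
    `<style>${criticalCss}</style>`,
    // The full stylesheet is preloaded and only applied once it has finished loading.
    `<link rel="preload" href="${deferredHref}" as="style" onload="this.onload=null;this.rel='stylesheet'">`,
    // Fallback for visitors who have JavaScript disabled.
    `<noscript><link rel="stylesheet" href="${deferredHref}"></noscript>`,
  ].join('\n');
}

console.log(buildStyleTags('.hero{margin:0}', '/css/main.css'));
```

The printed markup would go into the head of the page, with the first argument holding only the rules needed above the fold.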

What is "above the fold"?

"Above the fold" is a term derived from the print newspaper industry that has also been adapted for the digital realm. In the context of newspapers, "above the fold" refers to the content that appears on the top half of the front page, visible when the newspaper is folded and displayed on a newsstand. This area is considered prime real estate because it catches the attention of passersby and can influence their decision to purchase the newspaper.

In the digital world, "above the fold" refers to the portion of a web page that is visible without scrolling down. It represents the content that appears on a user’s screen immediately upon loading a webpage. Since users typically see this content first, it is crucial for engaging their attention and encouraging them to continue exploring the website or taking desired actions.

The exact position of the fold can vary depending on factors such as screen size, resolution, and browser settings, as well as the type of device being used. However, the concept of "above the fold" remains relevant as it highlights the importance of capturing users' attention with compelling and relevant content in the initial viewable area of a webpage.

Finding the CSS classes being used above the fold

To find the CSS classes that are used above the fold, you first need to define what the fold actually is for you. You may choose to set the browser's viewport height (the ATF "border") to 950px for desktop, or 892px for mobile (Google Pixel 7 Pro).

To play it safe, you could simply go to 1000px for viewport height and have that as your ATF.

Any CSS classes used on HTML elements that are visible within this 1000px area would be considered critical CSS. The goal is to have this content shown as quickly as possible, without having to wait for the browser to download and parse the full CSS.

You can read more about this on https://web.dev/defer-non-critical-css/

Find critical CSS with my Node.js script

I have put together the following Node.js script. It will help you find CSS classes that are used on HTML elements which are visible above the fold.

When calling the script, you have four possible named arguments.

sitemapUrl <- required

viewportWidth <- defaults to 1000. Only enter an integer. Do not append "px"

viewportHeight <- defaults to 1000. Only enter an integer. Do not append "px"

userAgent <- defaults to My-User-Agent. Do not use quotes.

All of them are pretty self-explanatory. The sitemapUrl argument is required; all other arguments are optional.

How it works

The app will get all URLs from the sitemap you provided with the sitemapUrl argument. This can either be a sitemap index file, or a regular sitemap file. In case it is a sitemap index file, it will extract all URLs from the sitemaps referenced in the sitemap index file.
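
To make the sitemap index case more concrete, here is a simplified sketch of the idea. It is not how the sitemapper package is implemented: the function names parseSitemap and collectUrls, the regex-based parsing, and the in-memory example.com files are all assumptions for illustration, and a regex is not a full XML parser (CDATA sections, for example, are not handled).

```javascript
// Simplified illustration: decide whether the XML is a sitemap index or a
// regular sitemap, and pull out every <loc> value it contains.
function parseSitemap(xml) {
  const isIndex = /<sitemapindex[\s>]/.test(xml);
  const locs = [...xml.matchAll(/<loc>\s*([^<]+?)\s*<\/loc>/g)].map((match) => match[1]);
  return { type: isIndex ? 'index' : 'urlset', locs };
}

// Follow a sitemap index down to the page URLs. fetchText is injected so the
// sketch can run without network access.
async function collectUrls(sitemapUrl, fetchText) {
  const { type, locs } = parseSitemap(await fetchText(sitemapUrl));
  if (type === 'urlset') {
    return locs; // A regular sitemap lists page URLs directly
  }
  // A sitemap index lists further sitemaps, so each of them is resolved in turn
  const nested = await Promise.all(locs.map((loc) => collectUrls(loc, fetchText)));
  return nested.flat();
}

// Hypothetical in-memory "files" standing in for real HTTP responses
const fakeFiles = {
  'https://example.com/sitemap_index.xml':
    '<sitemapindex><sitemap><loc>https://example.com/pages.xml</loc></sitemap></sitemapindex>',
  'https://example.com/pages.xml':
    '<urlset><url><loc>https://example.com/</loc></url><url><loc>https://example.com/about</loc></url></urlset>',
};

collectUrls('https://example.com/sitemap_index.xml', async (url) => fakeFiles[url])
  .then((urls) => console.log(urls));
```

In the actual script, this work is delegated to sitemapper, which also takes care of the HTTP requests.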

After that, it will loop through all URLs it has extracted and open them in a headless browser controlled by Puppeteer. It will send the user agent string you gave and set the viewport of the browser to the dimensions you have provided. If none are provided, the default values are used.

For each URL, all CSS classes used on HTML elements which are visible within the viewport area are extracted.
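
What counts as "visible within the viewport" is a design choice. The script listed further down keeps an element only if its bounding box lies entirely inside the viewport. The sketch below compares that strict test with a looser overlap test on plain objects shaped like getBoundingClientRect() results. The function names and the sample elements are invented for this example, and only the vertical axis is considered, as in the script.

```javascript
// Strict test: the element must lie entirely inside the viewport.
function isFullyInsideViewport(rect, viewportHeight) {
  return rect.top >= 0 && rect.bottom <= viewportHeight;
}

// Looser alternative: any vertical overlap with the viewport counts.
function overlapsViewport(rect, viewportHeight) {
  return rect.bottom > 0 && rect.top < viewportHeight;
}

// Collect the unique, sorted class names of all elements that pass the given test.
function collectClasses(elements, viewportHeight, isVisible) {
  const classes = new Set();
  for (const { rect, classList } of elements) {
    if (isVisible(rect, viewportHeight)) {
      classList.forEach((className) => classes.add(className));
    }
  }
  return [...classes].sort();
}

// Made-up page layout: a header, a long content wrapper that crosses the fold, and a footer
const elements = [
  { rect: { top: 0, bottom: 120 }, classList: ['site-header'] },
  { rect: { top: 120, bottom: 2400 }, classList: ['content'] },
  { rect: { top: 2400, bottom: 2600 }, classList: ['site-footer'] },
];

console.log(collectClasses(elements, 1000, isFullyInsideViewport)); // [ 'site-header' ]
console.log(collectClasses(elements, 1000, overlapsViewport)); // [ 'content', 'site-header' ]
```

With the strict test, wrappers that start above the fold but extend below it are left out, so it may be worth checking whether their classes matter for your layout before relying on the list.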

After all URLs have been rendered and the CSS classes have been extracted, the app will write a file called domain-name.com-CSS-ATF.csv. All CSS classes used above the fold on your entire website are added to the file for you to work with.
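
The output step can be sketched as a small pure function. The file name pattern follows the one used in the script's code (hostname followed by -CSS-ATF.csv). The helper name buildCsv and the example.com URL are assumptions for illustration only.

```javascript
// Hypothetical helper: derives the output file name from the sitemap URL and
// turns a list of class names into one-class-per-line CSV content.
function buildCsv(sitemapUrl, classes) {
  const { hostname } = new URL(sitemapUrl);
  const unique = [...new Set(classes)].sort(); // De-duplicate, then sort alphabetically
  return {
    fileName: `${hostname}-CSS-ATF.csv`,
    content: unique.join('\n'),
  };
}

const result = buildCsv('https://www.example.com/sitemap.xml', ['nav', 'hero', 'nav']);
console.log(result.fileName); // www.example.com-CSS-ATF.csv
console.log(result.content);
```

Because there is only one column and class names cannot contain whitespace, no CSV quoting is needed here.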

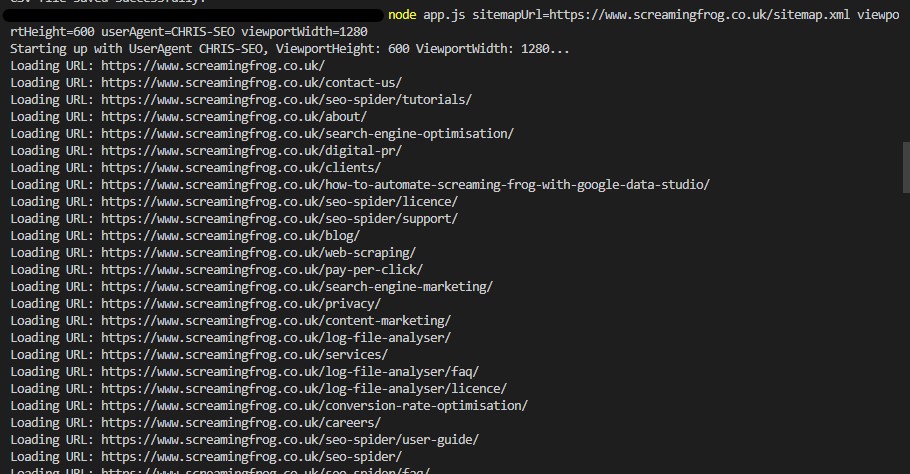

Here’s an example of what it did for the website screamingfrog.co.uk.

The script starts up and logs to the console which URL it is currently working on.

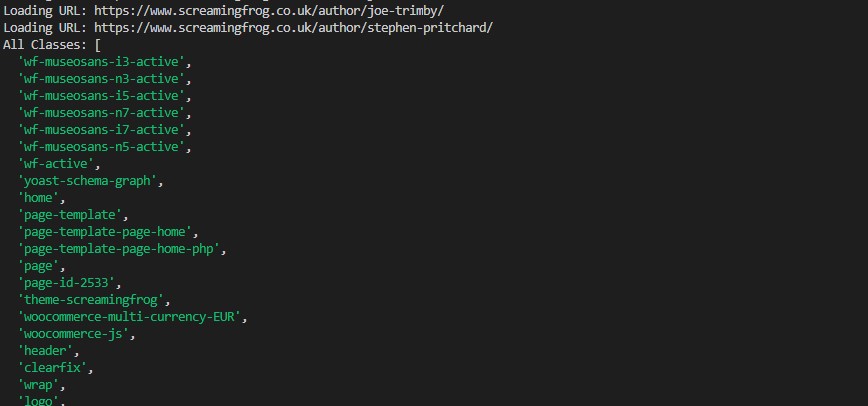

After all URLs have been processed, the CSS classes that were found are logged to the console.

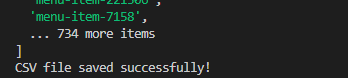

When it is done, it will let you know that the file has been saved.

This is what the content of the file looks like.

How to use it?

To use the script, you need to have Node.js installed on your machine.

Create a directory on your computer for this script to run in. For this example, we will call it critical-css.

mkdir critical-css

Now open your code editor and open the directory you have just created.

Also, open your console / terminal and cd into the directory.

For this script to run, you need to install a few dependencies.

npm install puppeteer cheerio xml2js sitemapper

The fs module ships with Node.js, so it does not need to be installed separately.

Once you are done with this, you are ready to create the script file. In your editor, create a new file called app.js and open it. Copy the code below and paste it into your JS file. Save it 🙂

Now you simply go back to your terminal and call the script.

node app.js sitemapUrl=https://www.screamingfrog.co.uk/sitemap.xml viewportHeight=800 viewportWidth=800 userAgent=CHRIS-SEO

You can set the viewportHeight and viewportWidth, so you can check what CSS is visible in different scenarios.

The code

You can find this code on GitHub. Feel free to contribute 🙂

https://github.com/chrishaensel/critical-css

The code below is the first version. The most recent one is on GitHub.

Copy and paste this code into your app.js file.

/*
Author: Christian Hänsel
Contact: chris@chaensel.de
Description: Gets the CSS classes used above the fold throughout your website, using all URLs referenced in the sitemap you provide.
Date: 11.06.2023
*/

const puppeteer = require('puppeteer');
const cheerio = require('cheerio');
const xml2js = require('xml2js');
const Sitemapper = require('sitemapper');
const fs = require('fs');

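// Get the command-line arguments
let sitemapUrl, userAgent, viewportHeight, viewportWidth;

// Parse the named arguments, which are passed as key=value pairs
process.argv.slice(2).forEach((arg) => {
  // Split at the first "=" only, so values that contain "="
  // (such as sitemap URLs with a query string) stay intact
  const separatorIndex = arg.indexOf('=');
  if (separatorIndex === -1) {
    return; // Ignore anything that is not a key=value pair
  }
  const key = arg.slice(0, separatorIndex);
  const value = arg.slice(separatorIndex + 1);

  if (key === 'sitemapUrl') {
    sitemapUrl = value;
  } else if (key === 'userAgent') {
    userAgent = value;
  } else if (key === 'viewportHeight') {
    viewportHeight = parseInt(value, 10);
  } else if (key === 'viewportWidth') {
    viewportWidth = parseInt(value, 10);
  }
});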

// Validate the command-line arguments: the sitemap URL is required
if (!sitemapUrl) {
  console.error('Error: sitemapUrl is missing'); // You really need to pass a sitemap URL
  process.exit(1); // We exit. Seriously, we need the sitemap URL...
}

// Use default values if the optional arguments are not provided
if (!userAgent) {
  userAgent = 'My-User-Agent'; // The default user agent string to be used when none is given
}

if (!viewportHeight) {
  viewportHeight = 1000; // The default viewportHeight to use when none is given
}

if (!viewportWidth) {
  viewportWidth = 1000; // The default viewportWidth to use when none is given
}

// Get the URLs from the sitemap. If it's a sitemap index file,
// it will get the URLs from all sitemaps referenced in the sitemap index.
async function getUrlsFromSitemap(sitemapUrl, userAgent) {
  const sitemap = new Sitemapper({ requestHeaders: { 'User-Agent': userAgent } });
  // Errors are not handled here; they propagate to the caller
  const { sites } = await sitemap.fetch(sitemapUrl);
  return sites;
}

async function scrapeClasses(urls) {
  const browser = await puppeteer.launch({ headless: "new" });
  const page = await browser.newPage();
  await page.setUserAgent(userAgent);

  const allClasses = []; // Array to store all the used classes

  console.log("Starting up with UserAgent " + userAgent + ", ViewportHeight: " + viewportHeight + " ViewportWidth: " + viewportWidth + "...");

  for (const url of urls) {
    await page.setViewport({ width: viewportWidth, height: viewportHeight }); // Set your desired viewport size
    console.log("Loading URL: " + url);
    await page.goto(url, { waitUntil: 'networkidle0' }); // Load the page and wait until the network is idle

    // Evaluate the JavaScript code in the page context to collect CSS classes
    const classes = await page.evaluate(() => {
      const elements = Array.from(document.querySelectorAll('*')); // Select all elements on the page

      // Keep only the elements that lie entirely within the viewport (above the fold)
      const aboveFoldElements = elements.filter(element => {
        const rect = element.getBoundingClientRect();
        return rect.top >= 0 && rect.bottom <= window.innerHeight;
      });

      // Collect the CSS classes from the above-fold elements
      const classesSet = new Set();
      aboveFoldElements.forEach(element => {
        const elementClasses = Array.from(element.classList);
        elementClasses.forEach(className => {
          classesSet.add(className);
        });
      });

      return Array.from(classesSet);
    });

    // Add unique classes to the allClasses array
    classes.forEach(className => {
      if (!allClasses.includes(className)) {
        allClasses.push(className);
      }
    });
  }

  await browser.close();
  return allClasses;
}

// Call the function to fetch and process the sitemap
(async () => {
  try {
    const urls = await getUrlsFromSitemap(sitemapUrl, userAgent);
    const uniqueUrls = [...new Set(urls)];
    const allClasses = await scrapeClasses(uniqueUrls);

    console.log('All Classes:', allClasses);

    // Sort the classes alphabetically
    allClasses.sort();

    // Convert allClasses array to CSV format with each class on a separate line
    const csvContent = allClasses.join('\n');

    const url = new URL(sitemapUrl);
    const domain = url.hostname;
    const csvFileName = `${domain}-CSS-ATF.csv`;

    // Write CSV content to a file
    fs.writeFile(csvFileName, csvContent, 'utf8', (err) => {
      if (err) {
        console.error('Error writing CSV file:', err);
      } else {
        console.log('CSV file saved successfully!');
      }
    });
  } catch (error) {
    console.error('Error:', error);
  }
})();

If you have any suggestions, encounter any bugs or simply want to tell me that you love me: leave a comment below 🙂